Our Hardware Infrastructure

This is a short overview of some of the interesting architectures and machines that are available at the Department of Scientific Computing, used both for education and research purposes.

EXA: Heterogeneous Many-Core Cluster

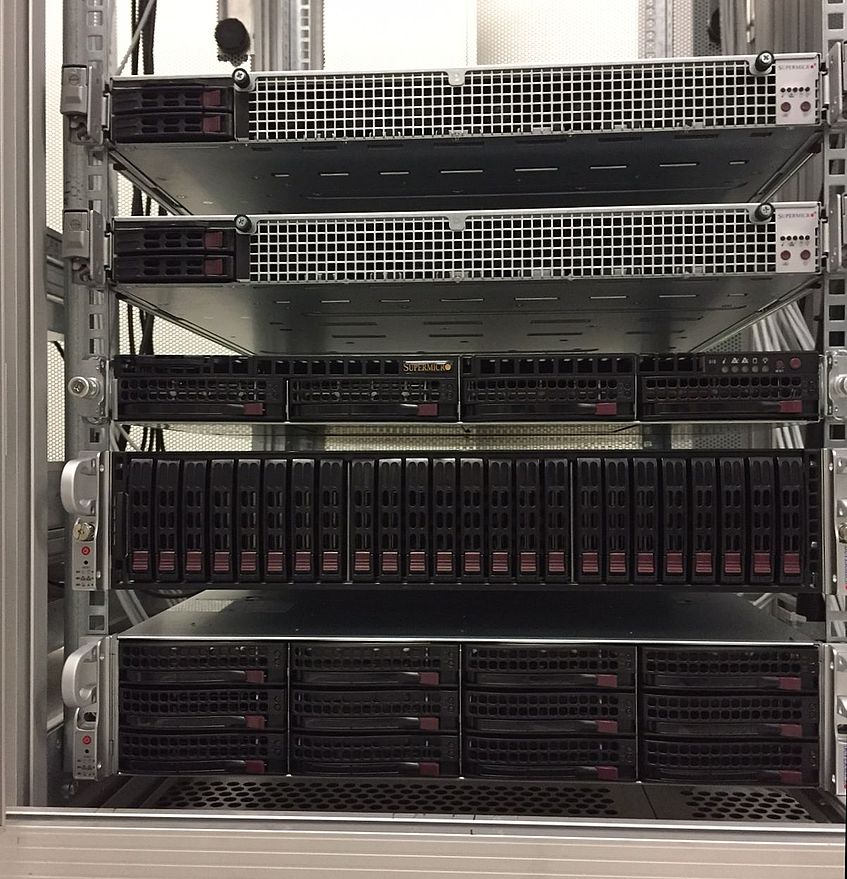

EXA is a compute cluster comprising a frontend and four heterogeneous compute nodes, all based on Supermicro SuperServer systems.

Node 1 features four Intel Xeon Gold 6138 20-core CPUs. Node 2 has two AMD Epyc 7501 32-core CPUs. Node 3 has two Intel Xeon Gold 6130 16-core CPUs and two NVIDIA Tesla V100 GPUs. Node 4 has two Intel Xeon Gold 6130 16-core CPUs and an AMD Radeon Instinct MI25 GPU.
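As a quick cross-check of the node list above, the CPU core counts can be tallied in a few lines of Python. This is only an illustrative sketch: the dictionary layout and the names `EXA_NODES` and `cores_per_node` are hypothetical, and it counts physical CPU cores only (no SMT threads and no GPU resources).

```python
# Physical CPU cores per EXA node as (sockets, cores per socket),
# taken from the node list above. Names are illustrative only.
EXA_NODES = {
    "node1": (4, 20),  # Intel Xeon Gold 6138
    "node2": (2, 32),  # AMD Epyc 7501
    "node3": (2, 16),  # Intel Xeon Gold 6130
    "node4": (2, 16),  # Intel Xeon Gold 6130
}


def cores_per_node(nodes):
    """Return a dict mapping node name to its physical CPU core count."""
    return {name: sockets * cores for name, (sockets, cores) in nodes.items()}


per_node = cores_per_node(EXA_NODES)
total_cores = sum(per_node.values())
print(per_node, total_cores)
```

Under these figures the four compute nodes together provide 208 physical CPU cores, with Node 1 contributing the largest share.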

Network capabilities include 10 Gigabit Ethernet and QDR InfiniBand. The cluster runs Linux (CentOS). SLURM is used for resource management.

EXA was put into service in October 2018 and is currently used for internal research.

PHIA: Xeon Phi and GPU Cluster

PHIA is a compute cluster comprising a frontend and eight compute nodes. The nodes are Supermicro 20127GR-TRF systems, each with two Intel Xeon E5-2650 2.0 GHz (Sandy Bridge) CPUs and 128 GB of RAM.

There are six heterogeneous nodes, each with two Xeon Phi 5110P cards (60-core, 1.053 GHz, 8 GB) and two NVIDIA Tesla K20 M-class cards. One node is configured with four Xeon Phi cards, and another has four NVIDIA Tesla cards.

Network capabilities include Gigabit Ethernet and QDR InfiniBand. The cluster runs Linux (RHEL).

PHIA was put into service in April 2013 and is currently used for internal research.

CORA: GPU Cluster

The CORA GPU cluster comprises one frontend and 6+1 compute nodes, each with two quad-core Intel Xeon X5550 2.66 GHz CPUs and 24 GB of DDR3-1333 system memory. Each compute node is additionally equipped with two NVIDIA Tesla C2050 GPUs and one NVIDIA Tesla C1060 GPU.

A single Tesla C2050 (Fermi architecture) provides 448 streaming cores, 3 GB of GDDR5 device memory and a peak single-precision floating-point performance of 1.03 TFLOP/s. A Tesla C1060 provides 240 streaming cores, 4 GB of GDDR3 device memory and a peak single-precision performance of 933 GFLOP/s. The interconnects of CORA include Gigabit Ethernet and high-performance QDR InfiniBand.
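Peak figures like these are usually derived as cores × floating-point operations per core per cycle × shader clock. The text gives core counts and peak rates but not the shader clocks, so the following sketch inverts the relation to show which clock each quoted peak implies. The operations-per-cycle values are assumptions not stated above: 2 for the C2050 (one fused multiply-add per cycle) and 3 for the C1060 (a multiply-add plus a multiply per cycle). The function names are hypothetical.

```python
def peak_gflops(cores, flops_per_cycle, clock_ghz):
    """Theoretical peak in GFLOP/s: cores * flops per cycle * clock (GHz)."""
    return cores * flops_per_cycle * clock_ghz


def implied_clock_ghz(peak, cores, flops_per_cycle):
    """Shader clock (GHz) implied by a quoted peak rate in GFLOP/s."""
    return peak / (cores * flops_per_cycle)


# Assumed flops per core per cycle (not given in the text): 2 and 3.
c2050_clock = implied_clock_ghz(1030.0, cores=448, flops_per_cycle=2)
c1060_clock = implied_clock_ghz(933.0, cores=240, flops_per_cycle=3)

print(f"C2050 implied shader clock: {c2050_clock:.3f} GHz")
print(f"C1060 implied shader clock: {c1060_clock:.3f} GHz")
```

Under these assumptions the quoted peaks correspond to shader clocks of roughly 1.15 GHz and 1.30 GHz. These are theoretical upper bounds; sustained application performance is typically well below them.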

In total, the CORA GPU cluster comprises 64 CPU cores, 7952 GPU cores and an aggregated main memory of 262 GB. The system offers approx. 64 TB of available disk space. CORA was put into service in February 2010 and was upgraded with Fermi GPUs in November 2010.

Since September 2013 it has been used for teaching purposes.
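The aggregate figures quoted for CORA can be reproduced from the per-node specification, but only under two assumptions that the text does not state explicitly: that the frontend has the same CPU and memory configuration as the compute nodes, and that the aggregated memory figure counts GPU device memory in addition to host memory. A minimal sketch with hypothetical variable names:

```python
CPU_CORES_PER_MACHINE = 2 * 4   # two quad-core Xeon X5550 CPUs
HOST_MEM_GB = 24                # DDR3-1333 per machine

# GPUs per compute node as (model, streaming cores, device memory in GB).
GPUS_PER_NODE = [("C2050", 448, 3), ("C2050", 448, 3), ("C1060", 240, 4)]

COMPUTE_NODES = 6 + 1
# Assumption: the frontend matches the compute nodes in CPU and host memory.
MACHINES = COMPUTE_NODES + 1

cpu_cores = MACHINES * CPU_CORES_PER_MACHINE
gpu_cores = COMPUTE_NODES * sum(cores for _, cores, _ in GPUS_PER_NODE)
# Assumption: the aggregate memory includes GPU device memory.
memory_gb = (MACHINES * HOST_MEM_GB
             + COMPUTE_NODES * sum(mem for _, _, mem in GPUS_PER_NODE))

print(cpu_cores, gpu_cores, memory_gb)
```

With these assumptions the script yields 64 CPU cores, 7952 GPU cores and 262 GB, matching the quoted totals.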

NOVA: AMD Opteron™ 6172

The NOVA mini-cluster comprises four compute nodes, each with two 12-core AMD Opteron™ 6172 2.1 GHz processors and 48 GB of 1333 MHz DDR3 system memory. Network interconnects include QDR InfiniBand and Gigabit Ethernet.

In total, the system offers 96 CPU cores with 192 GB of DDR3 memory and 8 TB of HDD storage. The system runs Red Hat Enterprise Linux 6 (64 bit) and was put into service in March 2011.

MERET: Intel® Nehalem-EX

MERET is an Intel® "Nehalem-EX" based system comprising four Intel® Xeon® X7560 8-core processors (2.26 GHz, 24 MB L3) and 64 GB of RAM. Each core provides 2-way SMT, bringing the total number of hardware threads supported by MERET to 64. The system runs Red Hat Enterprise Linux 5 (64 bit).

MERET was put into service in October 2010.

NADIA: General-Purpose computation on GPU (GPGPU)

NADIA comprises two Intel Xeon X5550 quad-core processors with 24 GB of DDR3-1333 memory. Additionally, NADIA is equipped with two CUDA-enabled NVIDIA GeForce GPUs (a GTX 480 and a GTX 285) and an ATI Radeon HD 5870 GPU.

The NVIDIA GTX 480 GPU provides 480 processor cores, 1536 MB of GDDR5 device memory with a bandwidth of 177 GB/s, a 384-bit memory bus, a 700 MHz core frequency and a 1400 MHz unified processor frequency.

The NVIDIA GTX 285 GPU provides 240 processor cores, 1 GB of GDDR3 device memory with a bandwidth of 159 GB/s, a 512-bit memory bus with 8 independent controllers, a 648 MHz core frequency and a 1476 MHz unified processor frequency.

The ATI Radeon HD 5870 provides 1600 stream processing units, a GDDR5 interface with 153.6 GB/s memory bandwidth and a single-precision peak performance of 2.72 TFLOP/s.
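Memory bandwidth figures of this kind follow from the bus width and the effective transfer rate: bandwidth = (bus width in bytes) × (transfers per second). The text does not list memory clocks, so the sketch below uses the GTX 285 figures (512-bit bus, 159 GB/s) to compute the effective transfer rate they imply. The function names are hypothetical and the result is a derived value, not a quoted specification.

```python
def bandwidth_gb_s(bus_width_bits, transfer_rate_gt_s):
    """Peak memory bandwidth in GB/s from bus width and effective rate."""
    return bus_width_bits / 8 * transfer_rate_gt_s


def implied_transfer_rate_gt_s(bandwidth, bus_width_bits):
    """Effective transfer rate (GT/s) implied by a quoted bandwidth in GB/s."""
    return bandwidth / (bus_width_bits / 8)


# GTX 285: 512-bit bus and 159 GB/s, as quoted above.
gtx285_rate = implied_transfer_rate_gt_s(159.0, 512)
print(f"GTX 285 implied effective rate: {gtx285_rate:.3f} GT/s")

# Round trip: the implied rate reproduces the quoted bandwidth.
print(f"{bandwidth_gb_s(512, gtx285_rate):.1f} GB/s")
```

A 512-bit bus moves 64 bytes per transfer, so 159 GB/s corresponds to roughly 2.48 billion transfers per second.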

The system runs Linux (RHEL x86_64).

DAWN: SPARC T3-2

This SPARC T3-2 server is a two-socket system in 3 rack units, powered by SPARC T3 processors. With two 1.65 GHz 16-core processors (each core capable of 8 simultaneous threads), it delivers up to 256 parallel threads. Memory size is 128 GB.

The system runs Solaris 10 (SPARC). It was put into service in May 2011.
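The thread count quoted for DAWN is the product of sockets, cores per socket and hardware threads per core. The same arithmetic gives the 64 hardware threads quoted for MERET. A minimal sketch, with a hypothetical helper name:

```python
def hardware_threads(sockets, cores_per_socket, threads_per_core):
    """Total hardware threads exposed by a shared-memory machine."""
    return sockets * cores_per_socket * threads_per_core


# DAWN: two 16-core SPARC T3 processors, 8 threads per core.
dawn_threads = hardware_threads(sockets=2, cores_per_socket=16, threads_per_core=8)

# MERET: four 8-core Xeon X7560 processors, 2-way SMT.
meret_threads = hardware_threads(sockets=4, cores_per_socket=8, threads_per_core=2)

print(dawn_threads, meret_threads)
```

Although DAWN has the same number of physical cores as MERET (32), its 8-way multithreading exposes four times as many hardware threads.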

DAISY: Sun Fire X4600 M2

The Sun Fire X4600 M2 server is a compact (4 rack units), energy-efficient system. Our machine houses 8 AMD Opteron 8218 dual-core processors running at 2.6 GHz, for a total of 16 cores, each with 1 MB of Level 2 cache. The CPUs are connected to each other by HyperTransport links running at 8 GB/s.

It includes 32 GB of main memory and two SAS (Serial Attached SCSI) hard disks. Electrical power is provided by four hot-swappable 850 W power supplies in a redundant 2+2 configuration. The operating system is Solaris 10 x86.

BERTA: Sun Fire X4500

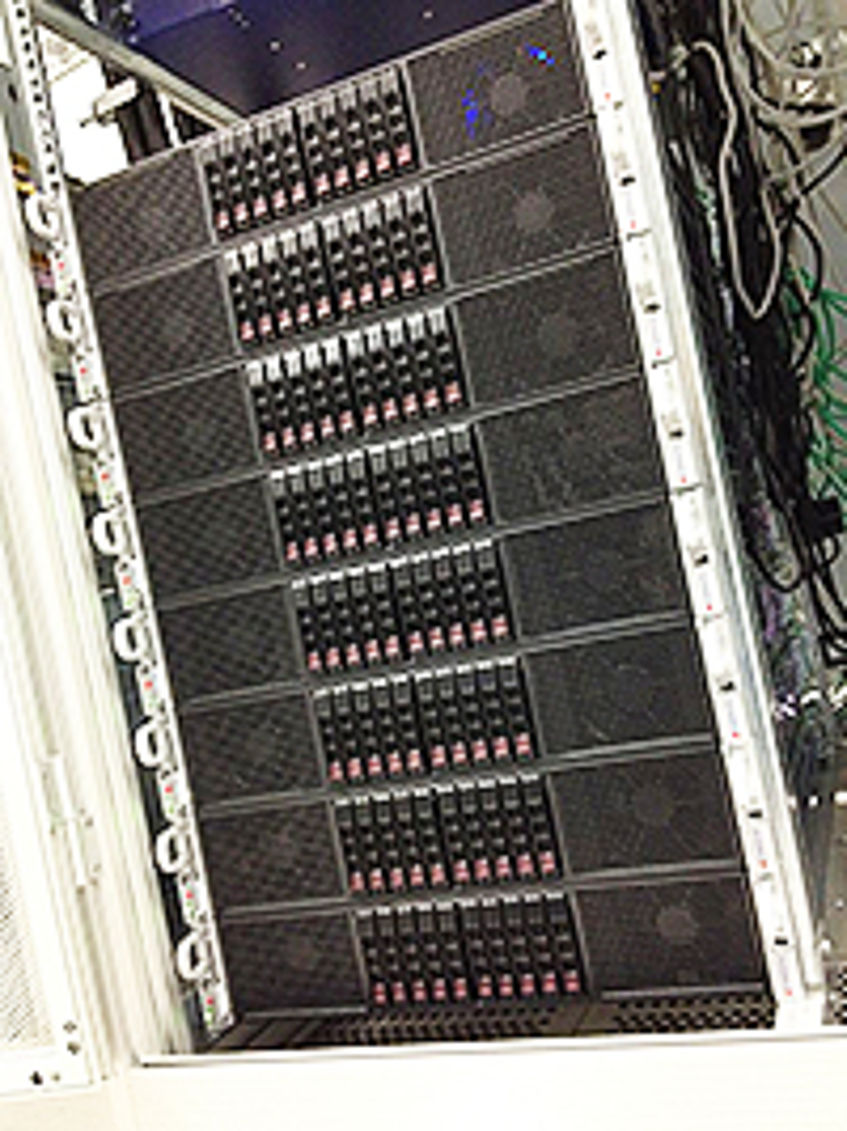

The Sun Fire X4500 combines a powerful four-core x64 server with up to 24 TB of storage in a compact format (4 rack units). It features 2 AMD Opteron 285 dual-core processors running at 2.6 GHz and 16 GB of main memory. The 48 3.5-inch SATA II disks with 500 GB each provide a total of 24 TB of raw disk space. Power consumption is 1800 W, provided by dual redundant power supplies.

The machine runs Solaris 10 x86 and uses software RAID implemented by the Solaris ZFS file system.
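The raw capacity quoted for BERTA uses decimal units (1 TB = 10^12 bytes), whereas many operating system tools report binary units (1 TiB = 2^40 bytes). The sketch below converts between the two, assuming each disk holds exactly 500 decimal gigabytes. It deliberately stops at raw capacity: the usable space after ZFS redundancy depends on the pool layout, which is not described here.

```python
DISKS = 48
DISK_SIZE_GB = 500  # assumed to be decimal gigabytes (10**9 bytes)

raw_bytes = DISKS * DISK_SIZE_GB * 10**9
raw_tb = raw_bytes / 10**12   # decimal terabytes
raw_tib = raw_bytes / 2**40   # binary tebibytes

print(f"raw capacity: {raw_tb:.0f} TB = {raw_tib:.2f} TiB")
```

The same 24 TB of raw disk space therefore appears as roughly 21.8 TiB when reported in binary units.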